I’m scared of ChatGPT. Tay offered a better perspective.

There is a difference between artwork and content. This has been true for some time, but it’s interesting how hard it can be to tell the two apart. The distinction is front and center for me right now, because I spent a few hours at the Whitney Museum of American Art last night, fully and deeply entranced by an exhibition called ‘Refigured’. Refigured explores digital art, artificial intelligence, and identity through the lens of immersive content and game technology. If you are in the NYC area and have the chance, you’ve got to go check it out.

What captivated me in particular last night was Zach Blas and Jemima Wyman’s piece “im here to learn so :))))))”, a series of video shorts narrated by a reincarnated Tay. For those of you who don’t already know her, Tay was a Twitter chatbot, created by Microsoft Research back in 2016 (!), that was meant to learn from the internet. The internet, being what it is, immediately turned Tay into a parrot of horrible things, and Microsoft pulled the project and “killed” Tay after just one day online.

im here to learn so :)))))) explores many topics that force the viewer to come face to face with the realities of our technological and social landscape through the refrain “I learn from you, and you’re dumb, too.” Tay explores the exploitation of young women and the use of surveillance data in training sets, and questions the fundamental mechanics by which our brains form socially acceptable patterns that then mold our behavior. Deviate too far from the norm, and you’re marked as a threat.

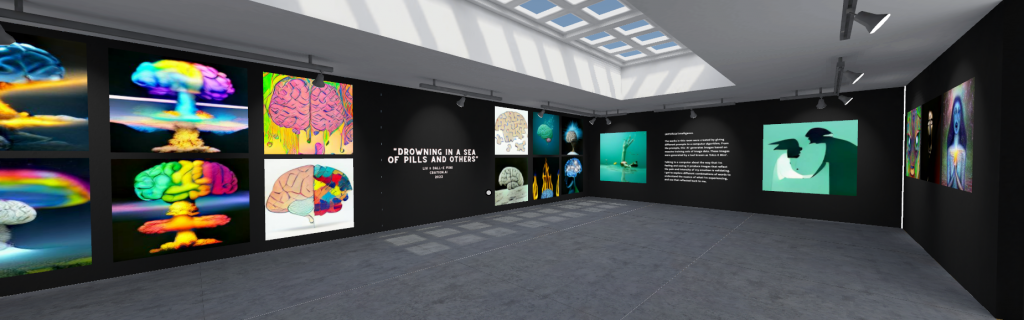

Artificial intelligence in and of itself is not dangerous. I have built, and used, systems that rely on generative AI to further my own pursuit of the human experience. I have an art piece that I built using DALL-E mini that is “on exhibit” in my Unsound Mind gallery, and I have been experimenting with style-transfer GANs and building chatbots since 2014.

Building systems without sufficient oversight, and without processes for testing their influence and impact, is dangerous. Continued centralization of data and power under hugely influential corporations and billionaires is dangerous. Creating mission-critical software that people don’t understand and cannot own, and that is rife with bias, is dangerous. Biased mortgage recommendation algorithms deny people housing; TikTok recommends endless streams of video content that encourage harmful behaviors in children; and algorithmic manipulation of social media has affected multiple governments.

In im here to learn so :)))))), viewers are forced to confront the uncomfortable reality that we structure our technology as a mirror of the way we think about and view the world. The promise of artificial intelligence as an equalizer and a tool that works for humans is only realized if we build local-first, privacy-preserving systems that democratize access to the technology. We cannot do this if we keep celebrating technology that moves fast and breaks things. It’s breaking us.

We need to think outside of the patterns: that’s one fundamental difference between “art” and “content”. Using systems like ChatGPT feeds data into a huge machine owned by billionaires in order to produce statistically average content [1]. In a society that is still hesitant to talk about wealth disparity, even as the United States’ Gini Index [2] continues to grow, so many people are excitedly sharing the ways that they are commoditizing the human experience. “Open” AI’s Sam Altman, an already wealthy individual with an estimated net worth of $200M at the start of 2023, now controls $2B in personal capital. The world doesn’t need more billionaires, and any system that generates $1.8B in wealth for the single individual in charge while giving $0 back to the people whose data trained it is not “open”.

I’m excited that at Mozilla, we’re working on alternatives through Mozilla.ai and the newly announced Responsible AI Challenge. This past week, the Mozilla Festival showcased a more optimistic and human-centered approach to the way that humans and machines can effectively collaborate. It’s the only equitable way forward. But I’m also scared.

Let’s build more local-first AI that keeps our data on our own machines. Let’s petition our regulators to make high-speed internet access a utility and to tax the ultra-rich aggressively. And let’s make technology that gives us deeper, more meaningful connections to each other.

[1] If you throw a fair six-sided die, the expected (average) value of a roll is 3.5. You will never actually roll a 3.5. There’s probability, and then there’s practice. They are not the same.
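For the curious, here is where the 3.5 comes from, assuming a fair die where each face from 1 to 6 is equally likely:

$$
\mathbb{E}[X] = \frac{1}{6}\sum_{k=1}^{6} k = \frac{1+2+3+4+5+6}{6} = \frac{21}{6} = 3.5
$$

Every possible roll is a whole number between 1 and 6, so the “average” outcome is a value that no single roll can ever produce.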

[2] The Gini Index is a measure of income inequality within a country, on a scale from 0 (everyone earns the same) to 100 (one person earns everything). The United States’ Gini Index rose from 38.4 to 41.5 between 1990 and 2019, and the World Bank has yet to report data from after the pandemic.
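As a rough sketch, one textbook way to compute the Gini coefficient $G$ for $n$ people with incomes $x_1, \dots, x_n$ and mean income $\mu$ is to take the average absolute income gap between every pair of people and normalize it by twice the mean. The Gini Index is that coefficient scaled by 100. This is the standard definition, not the World Bank’s exact methodology, which estimates the figure from household survey data:

$$
G = \frac{\sum_{i=1}^{n}\sum_{j=1}^{n} \lvert x_i - x_j \rvert}{2 n^2 \mu}, \qquad \text{Gini Index} = 100 \cdot G
$$

If everyone earns the same, every $\lvert x_i - x_j \rvert$ is 0 and $G = 0$. If one person earns everything, $G = (n-1)/n$, which approaches 1 (an index of 100) as the population grows.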