Resistance to Change is Often a Lack of Clarity

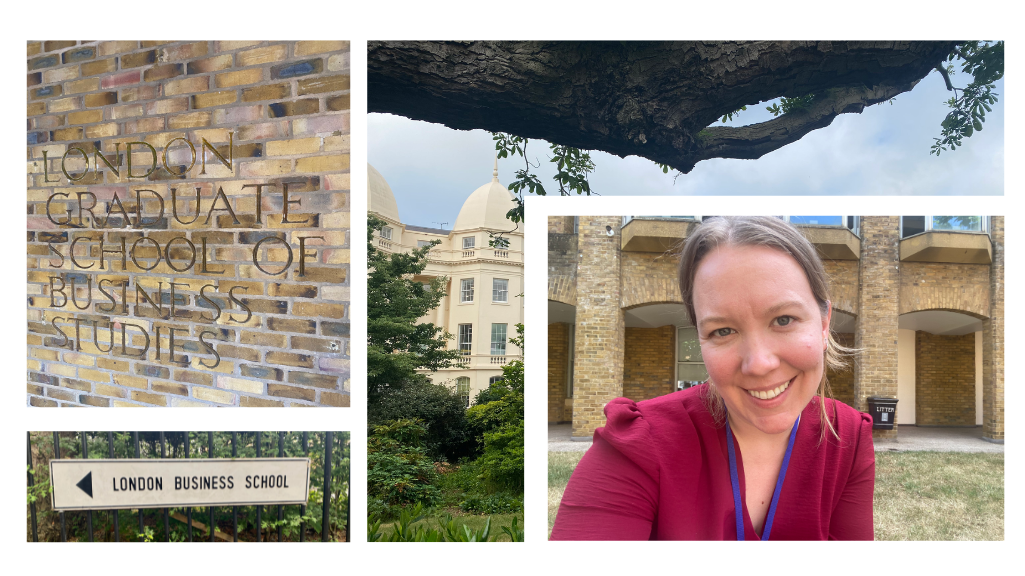

I’m on exchange at London Business School this week to study Strategic Innovation. Today, we covered a lot of ground, starting with why it is so challenging for established organizations to truly innovate, as well as the individual thought patterns that make it hard for us to think “outside of the box”. And speaking of thinking outside the box, we also touched on communication (and why it’s so freaking hard to do well). As it turns out, resistance to change is often a lack of clarity more than an actual resistance to trying something new, and the ambiguity that begets creative thinking (and, subsequently, innovation) often comes from tension between alignment at the organizational level and alignment at the individual level.

“What often looks like resistance to change is often a lack of clarity.”

Jessica Spungin

Asking for change is hard. Professor Spungin, the LBS adjunct professor teaching the course, explained that change is so difficult because of an imbalance between its perceived costs and its perceived benefits. There are also challenges in how leaders in organizations communicate: we assume that people share our baseline understanding and motivations, when in reality information often gets distorted and people simply hold different perspectives.

The course is largely focused on innovation in new business models, grounded in the perspective of larger organizations that are trying to introduce this kind of strategic innovation into their firms. However, we also did a brainstorming exercise to explore alternative forms of innovation, including things like:

- New product features

- Technological or digital transformation

- Process and organizational change

- Distribution and pricing changes

I’ve recently been musing quite a bit on whether product and innovation can coexist within the same team, after being prompted to reflect on the question at this year’s SXSW conference. While I’m still not certain of the answer, my optimism (naivety?) tells me that they can, but only with a very particular approach to both. I’m excited for the rest of this week, when I can continue to explore those tensions and better shape my own thoughts on the matter.

Continuing through the day, we covered how the tension between established companies and innovation is grounded in the fact that innovation is, by definition, disruptive. I often joke that I’m the “rebellious teenager” of the teams I’m on, because it seems like I *always* have a different view from the one I’m expected to have. When we got to the professor’s list of five ways to be creative (be paranoid, be angry, be a thief, be connected, and be real), a lot of my personality traits, and why I’ve ended up where I have in my work, suddenly made a lot more sense to me.

As our first guest speaker later pointed out:

“The only people who are going to innovate are the people who don’t have anything to lose by pushing for change.”

Paolo Cuomo

Of course, most established organizations don’t innovate effectively. We’ve been taught (at least in Western society) that we must grow infinitely. I learned today that, when Amazon had its first profitable year, Jeff Bezos actually apologized to shareholders: profit meant a lack of reinvestment.

One of the things I still deeply believe we can innovate on is capitalism. So much of my time in business school so far has hand-waved away the fact that uncontrolled growth at all costs is unsustainable. When cells in the human body do it, it’s cancer. When businesses do it, it’s desirable, unless they go a tiny bit too far and become monopolies (and even that appears to be up for debate these days, at least in the US). It’s distressing to me how often I hear people shrug and say, “It’s better than everything else we’ve tried.”

As I’ve been working my way through a survey of existentialist and phenomenological philosophy, I’ve also been getting a bit of a history lesson on the polarization between socialist/communist policies and imperial/capitalist ideologies that emerged from WWII. It serves as a reminder that our behaviors, priorities, and appetite for risk and change are deeply rooted in the socioeconomic structures we are a part of.

Also emphasized throughout today’s course was the human tendency to reject information that doesn’t fit our existing mental models of the world around us. In the larger conversation around machine learning and artificial intelligence (or rather, the reignited debate on what it means to be intelligent), this point feels especially critical to consider.

Often, people discuss AI in an anthropomorphic manner. When the words that AI-based chatbots produce are incorrect, it’s often referred to as a “hallucination”. Let’s pretend, for a moment, that we can accurately describe, in our human language, the experience a computer is having when it generates an answer. Even in this case, an AI is never hallucinating: a hallucination is, according to the Cleveland Clinic, “a false perception of objects or events involving your senses: sight, sound, smell, touch and taste.” A large language model is not hallucinating, because it isn’t receiving conflicting stimuli from any senses.

Even if we presume to call its exchange with a human through a chat interface a “sense”, or to call running an ingestion program to train it on new information a “sense”, a large language model has no way of distinguishing fact from fiction in its output. Each experience is as sensical to it as the previous one, regardless of whether the meaning a human ascribes to it is considered true or false. Sure, it can “fact-check” itself against the internet now, but unlike a human hallucination, what it produces isn’t made up entirely on its own.

When humans do what an AI does (make up incorrect information), it’s called lying, not “hallucinating”. A hallucination is also not inherently believed: if you’ve ever had open-eye hallucinations while on a psychedelic substance, you may be familiar with the sensation of knowing that the new patterns you are seeing didn’t exist before. But I digress a bit too far, at least for today.

Humans reject information that doesn’t fit their mental model of the world, but it’s worth remembering that there are many different versions of “ground truth”, and they need not fill us with so much fear. When we consider the challenges of facing ambiguity as it relates to innovation, we must recognize not just the firm’s own priorities and constraints, but also the overarching ones we’ve been born into.

One thing that I deeply appreciated about today’s class (and there were many, but I’ll choose to call attention to this particular one here) is the reminder that one source of creativity can be to look beyond our regular fields of endeavor. It’s why I find it critical to write not just about technology, but about how learning about Sartre, Beauvoir, and Merleau-Ponty influences my thinking. Of particular note for me today was the evolutionary beauty of mycorrhizal networks and participatory mycelium, and how we might use more of those metaphors to reconsider how we build and participate in the internet.

Another concept I’ll be considering is how to explicitly explore and differentiate between divergent and convergent thinking. Divergent thinking facilitates ideation, while convergent thinking begets decision-making. Innovation requires both, and yet we rarely call attention to which one we are doing at a given time. Through this framing, the emergence of the design-product-engineer pattern in software development makes even more sense to me, though I can’t help but wonder whether we’re approaching an era where job titles and descriptions, at least in some disciplines, shift from particular skills to particular styles of thinking.

Divergent indeed.