Is the Singularity already here?

I’ve had a hypothesis for several years now that we’re already well within the fabled technological singularity. For a refresher, here is how Wikipedia summarizes the idea of the singularity:

a hypothetical future point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable consequences for human civilization.[2][3] According to the most popular version of the singularity hypothesis, I. J. Good’s intelligence explosion model of 1965, an upgradable intelligent agent could eventually enter a positive feedback loop of self-improvement cycles, each successive and more intelligent generation appearing more and more rapidly, causing a rapid increase (“explosion”) in intelligence which would ultimately result in a powerful superintelligence, qualitatively far surpassing all human intelligence.

Technological singularity, Wikipedia, accessed 30 Aug 2024

Several interpretations of the technological singularity focus on a moment when humans merge with machines, and today this explosion of super-intelligence is often discussed in the context of AGI. The quest for a super-intelligent, self-modifying computer is openly promoted in Silicon Valley. Billions of dollars are being poured into the pockets of a handful of companies with the capital to train increasingly large models that aim to replicate, and then surpass, human intelligence, all at enormous ecological cost.

But what if we’re looking at this the wrong way?

On one level, it makes sense that we would look for computational intelligence that resembles human intelligence. The obsession with whether GPT-4o can pass the bar exam, diagnose illnesses more accurately than doctors, or get into business school is one symptom of a larger problem in how we discuss the singularity: we build our thesis of intelligence around the markers that our existing human society currently views as prestigious.

The internet has arguably enabled a rapid form of evolution within the human species. Stephen Hawking famously wrote about how the acceleration of information transmission far outpaces what biology alone can achieve across generations. Brains, organs that hold seemingly endless potential for neuroplasticity and change, are now supplemented by silicon.

It makes sense, in a way, that we primarily think about intelligence through the lens of individual human accomplishment. Belief in American exceptionalism is pervasive in Silicon Valley, and the billionaires running the Valley see themselves as the architects of a new era in which reality itself is redefined. Indeed, America was built on the principle of manifest destiny and the idea that the founders held a level of authority and intelligence over those they brutally colonized. Given that these ideals still run rampant (an ML engineer in San Francisco might make upwards of a million dollars a year, while data curators in South America are paid pennies for their time in Amazon gift cards), an ego-driven, totalitarian approach to owning intelligence makes sense.

Americans have the right to the freedom of speech, not the freedom of thought. The human mind and brain are still mysteries to us, and an understanding of true consciousness remains in the domain of the philosophical. Suggest in a meeting that the geopolitical impact of mass computational intelligence puts freedom of thought at risk, and you’re going to be the weird one in most companies.

Conflated with all of this is the idea that AGI or ASI (artificial super-intelligence) will look… well, singular. We think about these types of systems as a single product offering or business entity, because that’s the metaphor most readily available to us, and the one that most directly shapes the livelihoods we depend on. Corporations basically have more rights than people at this point, and the growing concentration of wealth among a select few entities puts the majority of us in a position of dependency.

We’ve only recently started to explore and accept the idea that human cognition is not the sole exemplar of intelligence we assume it to be. While much research into other forms of intelligence still tends to use human capabilities as benchmarks, our growing understanding of fungal intelligence hints at a more inclusive way to evaluate forms of consciousness, and at patterns we could explore in our computational endeavors.

Why, then, might we already be living through a technological singularity? Let’s look at the criteria from the definition above:

- Technological growth is uncontrollable and irreversible

- The results have unforeseeable human consequences

- An explosion of intelligence surpasses what humanity is capable of

Technological growth is uncontrollable and irreversible

Can you imagine what it would take to go back to a world without the internet? While several cultures around the globe remain relatively untouched by industrial and computational technology, we cannot “undo” our supply chain’s dependency on these advances. Individuals may be able to step back from living in tandem with technology by removing smart home devices, giving up phones, or moving to a neo-luddite community, but the economic and geopolitical incentives of nations today demonstrate the impossibility of controlling and reversing our dependency on computational capabilities. We aren’t taking all of our satellites out of space. We can’t stop Elon from putting up more.

The results have unforeseeable human consequences

The existence of the alignment problem in artificial intelligence, the challenge of ensuring that an AI’s goals are aligned with humanity’s best interests, implies to me that we are unable to see the full range of human consequences. Tell an AI to fix climate change, and humanity is gone: we’re the root cause. We couldn’t foresee the impact social media would have on manipulating elections (at least, not with enough clarity to stop it), and we didn’t predict a mental health crisis evolving hand-in-hand with “social” technologies. Media companies didn’t predict Twitch. Humans are not good at predicting the future. We don’t know what will happen next. Venture capitalists like to pretend that they can, but there’s a chicken-and-egg problem here: are they investing because they can see that the market will respond? Or does their investment create the conditions necessary for those future markets to exist?

An explosion of intelligence surpasses what humanity is capable of

This is the hardest of the criteria to assess because, as I’ve hinted above, we are also pretty bad at understanding and recognizing “other” intelligences beyond our own. This happens on both an individual and a societal level, and no one seems able to agree on what humanity is capable of as a collective to begin with. Telecommunication technologies have exposed us to a broader range of individual thought and ideas than ever before, yet on average, we’re not spending our time on traditional learning activities:

- Globally, we spend an average of 2 hours and 23 minutes per day on social media

- Americans spend 3 hours and 47 minutes per day on average watching traditional television

- 56,784,901 hours of live streaming content were watched on Twitch alone on August 30, 2024

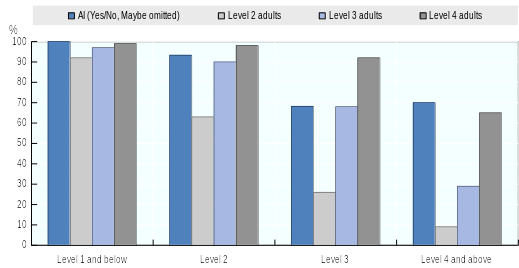

Mathematical literacy is closely tied to socioeconomic well-being. 100% of computers are mathematically literate. In 2018, six years ago, AI was already capable of outperforming most humans on literacy tasks, according to the OECD.

Ultimately, I would argue that whether or not we exist within the singularity is not the important question to answer. Instead, we need to be working to understand how to exist in a cooperative and multi-dimensional manner, given the global impact and access that we now have as a species. There has never been a better time to recognize and draw on the intelligence we see in each other and in the natural world around us as we face ever larger problems. Computational algorithms will not remain biased towards humans for long, regardless of our attempts to align them. We arguably cannot even align ourselves with the interests of the humans already on this planet (or of the planet itself). The interests of Silicon Valley do not reflect the interests of the world at large outside the vantage point of capitalism and (in)effective altruism. And “effective” altruism, in practice, is the lesser of two evils, not a “good” option set against a bad one.

Today’s investment frenzy and race for technological advancement at all costs reflect the imperial perspectives that have followed humanity throughout history. Perhaps, instead of being the cockroach, we could try to be the mycelia: individuals that exist within a network of cooperative, energy-sharing organisms that share resources and make decisions that transcend individual species. After all, the largest Armillaria, in Oregon’s Malheur National Forest, is over 2,500 years old.

If the ASI we build aligns with fungi, we’re probably screwed. Count me in on the side of the mushrooms.