Being a Pregnant Developer in the Age of AI is Weird

As I’m writing this, I’m wearing a green t-shirt with a giant eyeball over my rapidly growing stomach. It’s Halloween, and I’ve decided to dress up as Mike Wazowski – it feels like I’m all stomach these days, so it seemed appropriate. My partner dressed up as Boo.

Halloween is an especially interesting time of year to reflect on identity and persona: it’s a holiday that encourages us to step into a different character and perhaps play a different role from the one we might have on a day-to-day basis. Truth be told, I’m reflecting a lot on identity in general these days, and as I approach the third trimester of my first pregnancy, the way that I think about myself has been changing quite a bit.

For the first ten years of my career, my identity centered on my work, and my work centered around using immersive technology (games, virtual reality, metaverse technology) as a medium for self-discovery and expression. Through my work in immersive technology, I became more acutely aware of the sociological constructs through which we form a sense of self, community, and social norms. 3D as a playground for technological development provided me with a stronger sense of the importance of place, and how different environments shape our cognitive behaviors and can serve as an extension of ourselves. The convergence of spatial computing and machine learning has been present in my work for years, but I’ve recently started to refocus on language models and the relationship that we have to information.

If the first decade of my career was focused on identity through relationships to others and the world around me, I might hazard a guess that the next decade will be more innately focused on what comes “within”.

Working in and around the field of artificial intelligence used to be more straightforward for me. AI algorithms were served as API endpoints, a means to an end within the virtual worlds that I built: to generate imagery on the fly to tell stories in High Fidelity, to live-caption and translate spoken language into text when people shared a space, to use primitive image recognition services to try to create a proof of concept for scene descriptions. I’d plug in a “cognitive service”, and off I’d go.

Recently, though, I’ve come to the realization that offloading these “cognitive services” to massive corporations isn’t the philosophy that I want to adopt for the rest of my life. Technology isn’t neutral, and we don’t have to accept a world that has been made more complicated than necessary.

And so, we press forward in a journey to try to make complex technology that can help us navigate a complicated world. Artificial intelligence, in all of its challenges and opportunities, fundamentally pushes us to a place where we are thinking more critically about the very meaning of intelligence: who has it, what entities are capable of it, how we measure it, different ways of recognizing it. We’re “teaching sand to think”, in some capacity, ironically without first figuring out how to effectively align on intelligence as a human species.

Or perhaps it is that desire to recognize, nurture, and tap into a network of expanded consciousness that motivates us along this path: one where we create both higher and lower levels of abstraction with which to explore our professional goals, our purpose, ways to resolve increasingly catastrophic human behaviors towards the planet and one another. For me, a new reason has emerged – to guide a new human being through the complexity that is life and existence.

As I read books on human development and parenting, I’m learning about the ways that human brains learn. I’m then sitting down to write code that turns my own computer into an aid for my personal thought processes, which forces me to both understand and articulate that meta-cognition to myself, and communicate it to other people through language, code, and the Ultimate Expression of Human Intelligence, slide decks. I participate in groups where my metaphors are grounded in creativity and the abstract, starkly contrasted with specific mathematical structures that define behaviors.

This past weekend, my partner and I built what I affectionately call my “local AI supercomputer”. It is not, by any 2023 definition, an Actual Supercomputer, but our standards for such things change. A supercomputer is measured in various magnitudes of FLOPS (depending on what decade you’re in, megaflops, gigaflops, teraflops, or petaflops) and this computer is pretty darn super to me. For a reference point, the dual 4090 GPUs that I have in my new AI computer (166 TFLOPS) have 30 more TFLOPS than Blue Gene/L, IBM’s supercomputer from 2005 (136 TFLOPS).
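For anyone who wants to check that arithmetic, here is a minimal sketch in Python. The two constants are simply the figures quoted above, and the names are made up for illustration. GPU and supercomputer numbers are usually measured at different precisions and under different benchmarks, so treat this as a rough comparison rather than a benchmark.

```python
# Figures as quoted in this post, in TFLOPS. They are not measured here.
DUAL_4090_TFLOPS = 166.0
BLUE_GENE_L_2005_TFLOPS = 136.0


def compare(newer: float, older: float) -> tuple[float, float]:
    """Return (absolute difference, ratio) between two TFLOPS figures."""
    return newer - older, newer / older


diff, ratio = compare(DUAL_4090_TFLOPS, BLUE_GENE_L_2005_TFLOPS)
print(f"Difference: {diff:.0f} TFLOPS")
print(f"Ratio: {ratio:.2f}x")
```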

I joked that it was a parenting exercise to build this PC with my partner. We seamlessly traded off components and tasks, sometimes exchanging non-verbal signals and shared context with one another to have a tool ready before the other needed it. He talked through steps and order of operations; I made decisions about budget, timeline, and software. He reassured me when I worried about power consumption and overheating.

Of course, raising human children is not the same as building AI systems. For one, my computer is never going to have the unique experience of kicking me from the inside of my body. However, the more we focus on “creating digital intelligence”, the more we will be forced to reconcile our own assumptions about the models and data that surround us, the origin of that information, the belief structures we hold, and how it all plays out to influence our behavior and manipulate the electrical impulses that run through our human brains.

What triggers an electrical pattern to find a new synapse to follow, to fire neurons down a previously unexplored path, and continue to do so? Over the course of a single day, the amount of new information that we can choose to take in can be massive. Some of it is noise, some of it is signal, and with the attention economy, all of it is monetized. Well, almost. I think there’s an antidote to that, and it’s bringing back technical and digital literacy to individuals. I have computing power equivalent to what IBM produced for militaries and governments less than twenty years ago, available to me today. I do not need to keep relying on mega-corporations for my compute, unless they force me to (and I fear they are increasingly heading in that direction).

Yeah, it was a pain in some ways to get off Windows and switch to Ubuntu, and I’m constantly told that I’m at risk of “falling behind” because I insist on learning how to build machine learning systems on my own machine instead of taking advantage of centralized cloud compute, but I don’t want to offload my thinking to mega-corporations. I don’t want my thoughts, experiences, and decisions to be sucked into an algorithm that no one can explain, trained on who-knows-what from the internet at large.

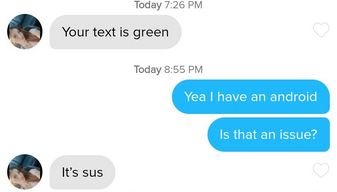

Do our tools of choice define our identity and our values? I would say so. That’s not to say that there should be immediate judgement of your phone choice, but it certainly can be a reflection of your world view on centralization, moderation, regulation, autonomy, agency…

So as I go down this rabbit hole of data, and of how we interact with systems that are learning to process, if not comprehend, language, with a presumed goal of creating contributing members of society… it’s hard to get out of my head, because there’s a lot going on there (and in the rest of my body, too).