Eigenvectors as a Representation of Nuance? (Or, How I’m Re-Visiting all of my Freshman Year Math Classes To Explain my Brain) – Pt. 1

When I was a freshman at Virginia Tech, I thought I understood math at a high level. I knew that calculus went further than what I had seen (I took 7 credits of math in high school, culminating with AP Calculus A) and that I’d be taking a lot of math as part of my Computer Science degree (I was one class short of a math minor). But when I entered the world of linear algebra, statistics, lambda calculus, and beyond, my brain calmly absorbed the material’s theory while failing entirely to understand how to apply it to anything else. As I entered the world of 3D computing and computer graphics, I slowly began to see more real-world applications of complex mathematical forms and representations, but it still feels like a field where my understanding is sludgy and vastly incomplete.

In a conversation with a co-worker today, we were talking about the challenges a democratically organized business unit faces in coming to consensus about complex topics. Often, organizations represent their stance on these topics by creating a mission statement or a series of values that articulates a position in writing. Building consensus requires a clear understanding of the various perspectives within a given group of individuals, and increasingly, it requires understanding nuance even within our own individual frame of mind.

Someone recently noted to me that we, as humans, have only lately started to acknowledge that there are an infinite number of states of “reality”: each of us has uniquely constructed a reality based on our lived experiences, and we exist in an increasingly global society where we can learn from, and step into, other people’s lived experiences. In many cases, this means shifting away from the idea of a single right or wrong answer to a problem, and instead exploring new ways to communicate with additional nuance and context.

Math: The situation’s a lot more nuanced than that

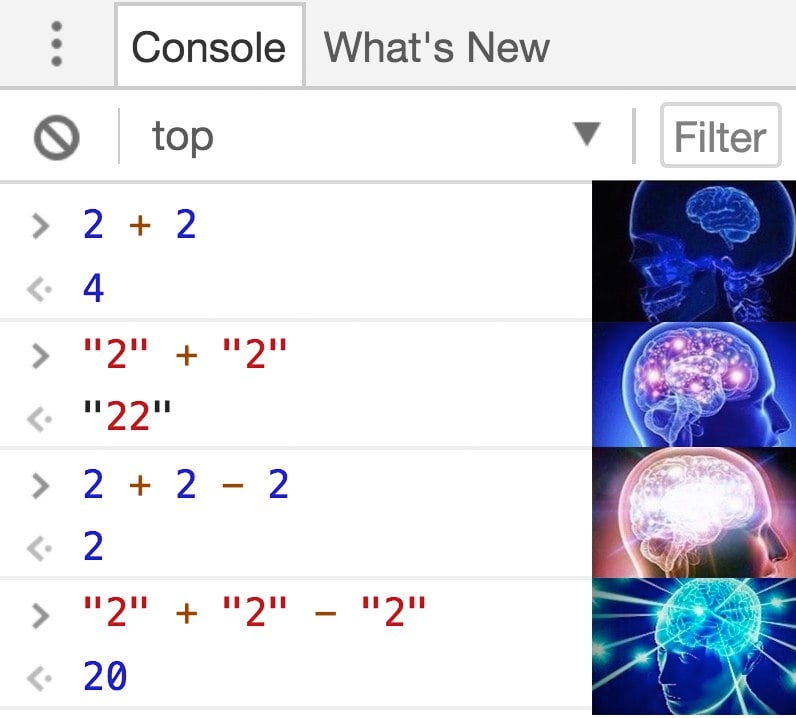

We are taught, at a young age, that the foundation of our mathematical system is basic arithmetic: 2+2 always equals four; zero added to anything will always equal the other number. Programming languages are built on top of mathematical principles, and can immediately show how context changes the outcome of a seemingly “absolute” answer:

In the image above, you can see how adding quotation marks changes the way that the program calculates two plus two. The quotation marks in the second calculation tell the JavaScript programming language that this is not the “mathematical value” of a two, but is instead a “word representing a two”. Adding two numbers (without quotes) adds their values, while adding two words (“strings”) sticks those two strings together. I can’t explain how the last one gets twenty, though.
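For the curious, this behavior is easy to reproduce in a few lines of JavaScript. This is a minimal sketch: the exact expressions in the image may differ, the variable names are illustrative, and `"2" + 2 - 2` is only a guess at one common way to arrive at twenty. The `+` operator concatenates whenever either operand is a string, while `-` only does arithmetic, so JavaScript converts the string `"22"` back into a number before subtracting.

```javascript
// Illustrative expressions; these may not match the ones in the image.
const numberSum = 2 + 2;     // both operands are numbers: arithmetic, 4
const stringSum = "2" + "2"; // both operands are strings: concatenation, "22"
const mixed = "2" + 2;       // one string operand: 2 becomes "2", giving "22"
const twenty = "2" + 2 - 2;  // ("2" + 2) is "22"; `-` converts "22" to 22, giving 20

console.log(numberSum, typeof numberSum);
console.log(stringSum, typeof stringSum);
console.log(mixed, typeof mixed);
console.log(twenty, typeof twenty);
```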

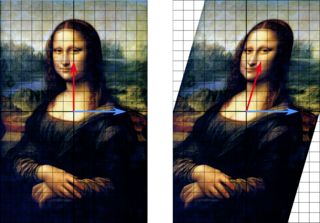

Over time, in college, I learned more math, including how to multiply matrices (grids of numbers) together. Matrices have many uses in computing, especially in evaluating how changing the scale along a particular set of dimensions affects an overall system of values.
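Here is a minimal sketch of matrix multiplication in JavaScript, assuming matrices are stored as arrays of rows. The function name `multiplyMatrices` and the example values are illustrative, not taken from any library. Multiplying a scaling matrix by a matrix whose columns are points stretches every point along one dimension:

```javascript
// Multiply matrix a (rows x inner) by matrix b (inner x cols), both arrays of rows.
// Entry (i, j) of the result is the dot product of row i of a with column j of b.
function multiplyMatrices(a, b) {
  const rows = a.length;
  const inner = b.length;
  const cols = b[0].length;
  if (a[0].length !== inner) {
    throw new Error("Inner dimensions must match");
  }
  const result = [];
  for (let i = 0; i < rows; i++) {
    const row = [];
    for (let j = 0; j < cols; j++) {
      let sum = 0;
      for (let k = 0; k < inner; k++) {
        sum += a[i][k] * b[k][j];
      }
      row.push(sum);
    }
    result.push(row);
  }
  return result;
}

// Stretch the x dimension by 3 and leave y unchanged.
const scaleX = [
  [3, 0],
  [0, 1],
];
// Columns are the points (1, 2) and (4, 5).
const data = [
  [1, 4],
  [2, 5],
];
console.log(multiplyMatrices(scaleX, data)); // columns are now (3, 2) and (12, 5)
```

Because each entry of the result pairs a row of the first matrix with a column of the second, the number of columns in the first matrix must equal the number of rows in the second.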

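The picture above shows an example of a “shear” transformation. Each square on the grid can be represented by a numeric pair (e.g. 1 square up, 1 square to the right). A shear slides every point along one axis by an amount proportional to its position on the other axis (in this case, three blocks), so all of the squares are skewed. Most vectors change direction under a shear, but the blue arrow does not: it is an eigenvector, a vector whose direction is left unchanged by the transformation. An eigenvector can only be stretched or shrunk, by a factor called its eigenvalue; under a shear its length doesn’t change either, so its eigenvalue is 1.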
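The idea that an eigenvector is a direction the transformation leaves alone can be checked numerically. The sketch below assumes a horizontal shear with a factor of 3 (my guess at the “three blocks” in the picture), and the helper names are hypothetical. It uses the fact that the eigenvalues of a 2×2 matrix are the roots of λ² - (trace)λ + (determinant) = 0.

```javascript
// Apply a 2x2 matrix m to a 2D vector v.
function applyMatrix(m, v) {
  return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]];
}

// v keeps its direction if m*v is a scalar multiple of v,
// i.e. the 2D cross product of v and m*v is zero.
function keepsDirection(m, v) {
  const w = applyMatrix(m, v);
  return Math.abs(v[0] * w[1] - v[1] * w[0]) < 1e-12;
}

// Eigenvalues of a 2x2 matrix from lambda^2 - trace*lambda + det = 0.
// This sketch only handles real eigenvalues.
function eigenvalues2x2(m) {
  const trace = m[0][0] + m[1][1];
  const det = m[0][0] * m[1][1] - m[0][1] * m[1][0];
  const disc = trace * trace - 4 * det;
  if (disc < 0) throw new Error("Complex eigenvalues are not handled here");
  const root = Math.sqrt(disc);
  return [(trace + root) / 2, (trace - root) / 2];
}

const k = 3; // hypothetical shear factor
const shear = [
  [1, k], // x' = x + k * y
  [0, 1], // y' = y
];

console.log(applyMatrix(shear, [1, 0]));    // [ 1, 0 ]: unchanged
console.log(applyMatrix(shear, [0, 1]));    // [ 3, 1 ]: tilted
console.log(keepsDirection(shear, [1, 0])); // true
console.log(keepsDirection(shear, [0, 1])); // false
console.log(eigenvalues2x2(shear));         // [ 1, 1 ]
```

For a shear, both eigenvalues are 1, and only vectors lying along the sliding axis, such as (1, 0) here, keep their direction.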

If I’ve learned one thing in business school, it’s that you can make up a grid of any two principles and plot items along the two axes in order to make a point. This is really helpful if you can distill your problem space down to two variables with quantifiable “high” and “low” values that lead to sufficiently different outcomes. For example, the ‘Eisenhower Matrix’ is a specific type of 2×2 that uses ‘urgency’ and ‘importance’ as its variables to produce four combinations: urgent and important tasks, which you need to “do”; important but not urgent tasks (“schedule”); urgent but not important tasks (“delegate”); and tasks that are neither urgent nor important (“delete”).
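The Eisenhower Matrix is simple enough to express as a lookup from two yes/no answers to an action. This sketch treats urgency and importance as booleans, which assumes each task has already been judged “high” or “low” on both; the function name and the sample tasks are hypothetical.

```javascript
// Map a task's urgency and importance to an Eisenhower Matrix action.
function eisenhowerAction({ urgent, important }) {
  if (urgent && important) return "do";
  if (!urgent && important) return "schedule";
  if (urgent && !important) return "delegate";
  return "delete"; // neither urgent nor important
}

// Hypothetical tasks, for illustration only.
const tasks = [
  { name: "Server outage", urgent: true, important: true },
  { name: "Quarterly planning", urgent: false, important: true },
  { name: "Routine status email", urgent: true, important: false },
  { name: "Reorganizing old bookmarks", urgent: false, important: false },
];

for (const task of tasks) {
  console.log(`${task.name}: ${eisenhowerAction(task)}`);
}
```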

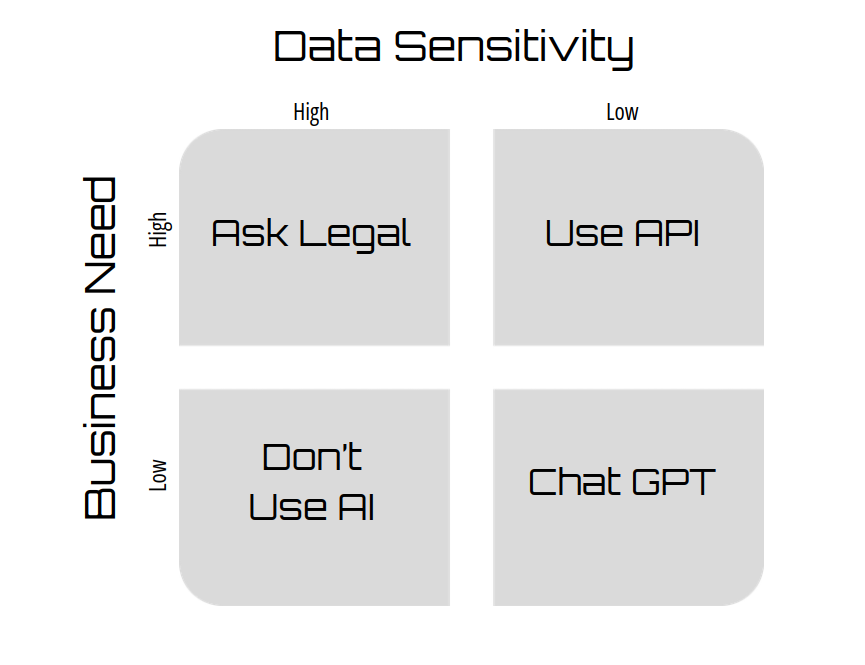

One decision that many organizations may be making right now is how to develop a corporate policy about artificial intelligence. There are many, many tensions at play between the various priorities and values held around this technology, and they often shift depending on whether an organization is looking at the problem from the perspective of ethical use, existential capabilities, economic impact, effects on labor, or how employees should engage with it. A company might choose to build an AI policy around a two-by-two matrix that plots “data sensitivity” against “business need”.

For illustrative purposes only. Please do not use this as a mechanism for decision-making around AI, and if you do, don’t blame me.

This can be a helpful way to start breaking down a concept, but it grossly oversimplifies many aspects of representation. It very quickly becomes difficult for humans to mentally map a third axis (e.g. cost), but adding one takes the number of options from 4 to 8 (2 × 2 × 2)! A third dimension doubles the number of choices you can make on this type of chart, resulting in quite a bit more nuance at the expense of complexity.
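The arithmetic behind this is that a grid with `levels` options on each of `axes` axes has levels^axes cells. A small sketch (the function name is hypothetical, and the axis labels are borrowed from the AI-policy example) enumerates the cells directly:

```javascript
// Enumerate every combination of levels across the given axes.
// With L levels per axis and A axes, this produces L ** A cells.
function enumerateCells(axisNames, levels) {
  let cells = [[]];
  for (const axis of axisNames) {
    const next = [];
    for (const cell of cells) {
      for (const level of levels) {
        next.push([...cell, `${level} ${axis}`]);
      }
    }
    cells = next;
  }
  return cells;
}

const twoByTwo = enumerateCells(["data sensitivity", "business need"], ["low", "high"]);
const threeAxes = enumerateCells(["data sensitivity", "business need", "cost"], ["low", "high"]);
const threeByThree = enumerateCells(["data sensitivity", "business need"], ["low", "medium", "high"]);

console.log(twoByTwo.length);     // 4
console.log(threeAxes.length);    // 8
console.log(threeByThree.length); // 9
```

Splitting each of the original two axes into three levels (low, medium, high) instead gives 3 × 3 = 9 cells, so refining the axes grows the grid just as quickly as adding new ones.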

What if we could slightly reduce the complexity of how we represent decisions and values by using eigenvalues and eigenvectors, rather than adding another full dimension? Could an eigenvalue, perhaps, be calculated against a matrix of perspectives within an organization, as a new way of communicating the nuance and fluid nature of these complex, multi-cellular entities in which we house business endeavors?

Let’s try an experiment!

To evaluate this idea, I ran a small (9-person) survey of team members and asked them to share their perspectives on AI innovation. Each person answered two questions on a scale of 1-5:

- When it comes to AI, companies should err on the side of being open (1) or safe (5)

- When it comes to AI, companies should err on the side of moving slower (1) or faster (5)

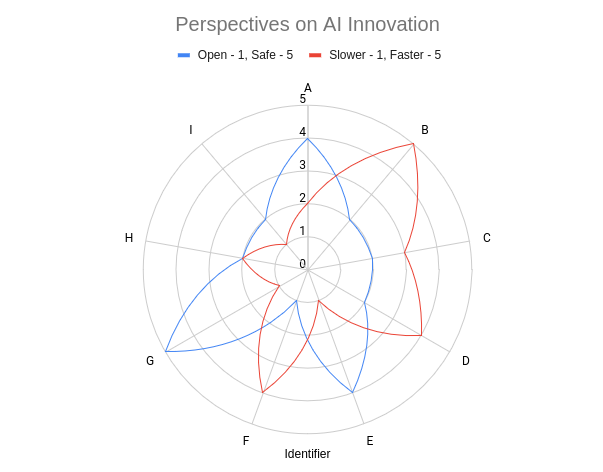

The radar chart above shows the responses. Points near the center of the graph represent slow (red) and open (blue), while points near the edge of the graph represent fast (red) and safe (blue). You can see that this particular sample generally believes that companies should err on the side of openness rather than safety, though the outliers have strongly held opinions. The group is less unified on whether companies should move faster or slower, but the responses end up leaning slightly toward moving more slowly.

It’s worth noting a striking pattern here: no one in this sample simultaneously rates ‘safety’ as a priority and ‘faster’ as a need. This is fascinating to me, because it’s often not the takeaway we might hear in casual conversation, and it’s worth calling out that conversations about strategy are often grounded in dialogue rather than in a quantitative evaluation across an organization.
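Patterns like this can be checked directly from the raw answers rather than eyeballed from a chart. A minimal sketch, assuming each response is stored as a pair of 1-5 scores; the field names `openSafe` and `slowFast`, the threshold of 4 for “leaning”, and the sample responses are all hypothetical placeholders, not the actual survey data:

```javascript
// Summarize 1-5 answers: the average for each question, plus how many
// respondents lean toward both "safe" and "faster".
// The threshold of 4 for "leaning" is an assumption for illustration.
function summarize(responses, threshold = 4) {
  const n = responses.length;
  const mean = (key) => responses.reduce((sum, r) => sum + r[key], 0) / n;
  const safeAndFast = responses.filter(
    (r) => r.openSafe >= threshold && r.slowFast >= threshold
  );
  return {
    meanOpenSafe: mean("openSafe"), // below 3 leans open, above 3 leans safe
    meanSlowFast: mean("slowFast"), // below 3 leans slower, above 3 leans faster
    safeAndFastCount: safeAndFast.length,
  };
}

// Placeholder answers, NOT the actual survey data.
const hypotheticalResponses = [
  { openSafe: 1, slowFast: 4 },
  { openSafe: 2, slowFast: 3 },
  { openSafe: 5, slowFast: 1 },
];

console.log(summarize(hypotheticalResponses));
```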

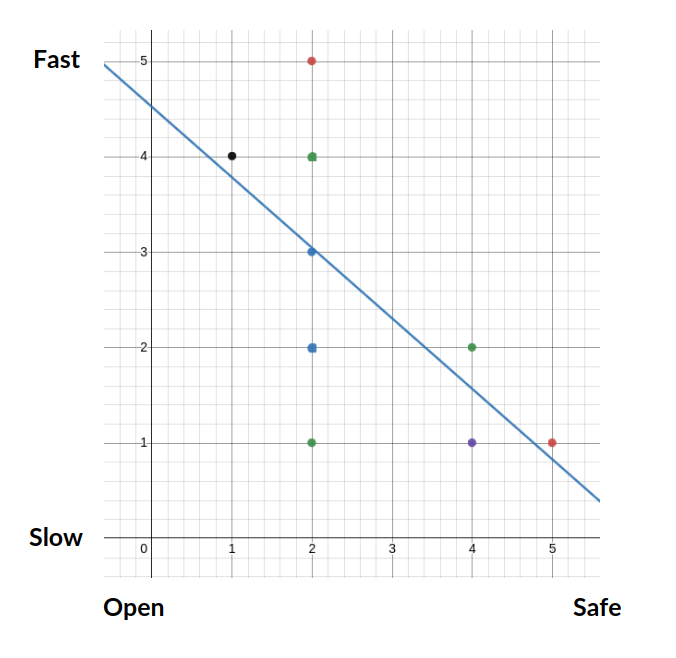

If you can forgive my lazy graphing skills, you can see these same points plotted on a typical 2D graph above, where the x-axis represents the ‘open-safety’ scale and the y-axis represents the speed of development. If we fit a trend line that predicts speed of development from the open-safety score, we get f(x) = 4.52381 - 0.73895x, plotted as a blue line on the graph. The negative slope is consistent with the observation that no one pairs safety with speed: the more a respondent leans toward safety, the slower they want companies to move. We might then suggest that this line could be interpreted as the sample’s “value” function for openness, safety, and speed of development when it comes to artificial intelligence.
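Assuming the trend line is an ordinary least-squares fit (the usual default for a linear trend line in spreadsheet tools), its slope and intercept come from a short formula: slope = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)², intercept = ȳ - slope · x̄. The survey’s nine points aren’t reproduced here, so the example below uses made-up points that lie exactly on y = 5 - x, and the function name `fitLine` is hypothetical.

```javascript
// Ordinary least-squares fit of y = intercept + slope * x to [x, y] points.
function fitLine(points) {
  const n = points.length;
  const meanX = points.reduce((sum, [x]) => sum + x, 0) / n;
  const meanY = points.reduce((sum, [, y]) => sum + y, 0) / n;
  let sxy = 0;
  let sxx = 0;
  for (const [x, y] of points) {
    sxy += (x - meanX) * (y - meanY);
    sxx += (x - meanX) ** 2;
  }
  if (sxx === 0) {
    throw new Error("All x values are identical; the slope is undefined");
  }
  const slope = sxy / sxx;
  return { slope, intercept: meanY - slope * meanX };
}

// Made-up points on the line y = 5 - x, NOT the survey data.
console.log(fitLine([[1, 4], [2, 3], [3, 2]])); // { slope: -1, intercept: 5 }
```

If the original tool used least squares, feeding in the nine (open-safety, speed) pairs should reproduce the slope and intercept quoted above.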

So… eigenvalues? Eigenvectors?

This is where I admittedly hit a wall in my explorations from a time-investment perspective. While I can generally remember pieces of my differential equations courses, when it came time to sit down and actually ‘draw the rest of the owl’ here, I tapped out. In theory, my mind is telling me that there is a way to calculate eigenvalues for each set of responses against the function of the organization, as a form of showing a relationship between the individual and organizational responses. In practice, it’s been too long since I did this type of math. To be continued.
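For whoever picks up the owl-drawing later, here is one possible starting point, offered as a hedged sketch and not necessarily what I had in mind: principal component analysis, a standard technique that takes the eigenvectors and eigenvalues of the covariance matrix of a set of points. Treating each respondent’s two answers as a point, the eigenvector with the largest eigenvalue is the direction in which the group’s opinions spread out the most, and its eigenvalue is the variance along that direction. The function names and sample points below are hypothetical placeholders, not the survey data.

```javascript
// Sample covariance matrix of 2D points given as [x, y] pairs.
function covariance2d(points) {
  const n = points.length;
  if (n < 2) throw new Error("Need at least two points");
  const meanX = points.reduce((sum, [x]) => sum + x, 0) / n;
  const meanY = points.reduce((sum, [, y]) => sum + y, 0) / n;
  let sxx = 0;
  let syy = 0;
  let sxy = 0;
  for (const [x, y] of points) {
    sxx += (x - meanX) ** 2;
    syy += (y - meanY) ** 2;
    sxy += (x - meanX) * (y - meanY);
  }
  const d = n - 1; // sample covariance divides by n - 1
  return [
    [sxx / d, sxy / d],
    [sxy / d, syy / d],
  ];
}

// Eigenvalues (largest first) and unit eigenvectors of a symmetric 2x2 matrix.
function symmetricEigen2x2(m) {
  const a = m[0][0];
  const b = m[0][1];
  const c = m[1][1];
  if (Math.abs(b) < 1e-12) {
    // Already diagonal: the coordinate axes are the eigenvectors.
    return a >= c
      ? { values: [a, c], vectors: [[1, 0], [0, 1]] }
      : { values: [c, a], vectors: [[0, 1], [1, 0]] };
  }
  const center = (a + c) / 2;
  const radius = Math.sqrt(((a - c) / 2) ** 2 + b * b);
  const values = [center + radius, center - radius];
  const vectors = values.map((lambda) => {
    // [b, lambda - a] solves (m - lambda * I) v = 0 when b is nonzero.
    const len = Math.hypot(b, lambda - a);
    return [b / len, (lambda - a) / len];
  });
  return { values, vectors };
}

// Placeholder (open-safety, speed) answers, NOT the actual survey data.
const hypotheticalAnswers = [[1, 4], [2, 3], [3, 2]];
const cov = covariance2d(hypotheticalAnswers);
const { values, vectors } = symmetricEigen2x2(cov);
console.log(cov);        // [ [ 1, -1 ], [ -1, 1 ] ]
console.log(values);     // [ 2, 0 ]
console.log(vectors[0]); // direction of greatest spread
```

In this toy example the answers fall on a single line with slope -1, so the first eigenvector points along that line (eigenvalue 2), and the perpendicular one has eigenvalue 0 because there is no spread off the line. Projecting an individual’s answers onto the first eigenvector would give a single number describing where they sit relative to the group.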

Why bother trying to make these associations at all?

In the age of multidimensional computing, we are being forced to reconcile our traditional binary way of thinking with a more complex space. The internet has allowed us to create increasingly complex systems of organization and existence. The global evolution of our social groups and economies, paired with increased access to compute power, is forcing us to advance our language, vocabulary, and thinking about the way that we evaluate and act upon information. It is fun to try to connect mathematical concepts to social ones.

With artificial intelligence, we are seeing a renaissance of the polymath. The roles and responsibilities that an individual can play within their communities are breaking down and re-forming. Technological advancements, and knowledge of how to work with these tools, enable far more exploration of scientific and artistic realms than was previously available to human brains. It is critical that we don’t stop exploring these threads of intersection and representation, because those exercises open us up to new and interesting ideas.

I’m especially interested in studying the relationship between the self and the “other”. I’ve written, at times, about the concepts of mitsein (“being-with”) and mitwelt (“with the world”), although where, I don’t know, because I have historically suffered from terrible digital hygiene. Today, I’m getting to add another German word to my list of philosophical motivators: eigenwelt (“the mental realm in which we know ourselves”).